The world around us keeps changing, and many of the changes are caused by, or related to, Information Overload and the instant access to limitless information resources. Recently I’ve come face to face with one more instance of this fact.

I’ve been programming computers – sometimes for work, but more often for fun – for over four decades. I’ve written programs on mainframes, minis and micros, in maybe a dozen languages, including Fortran, Algol, Assembler, BASIC, C, Forth and C++; except for the first two, I learned them all on my own.

So with the COVID-19 pandemic keeping me at home, I decided to use the time to teach myself how to write apps for the Android platform in Java. I hadn’t picked up a new language in almost 20 years, but I had learned all those others, so I knew how to go about it.

And then I discovered that I didn’t really know: in this new millennium, learning to code is a very different thing from what I was used to.

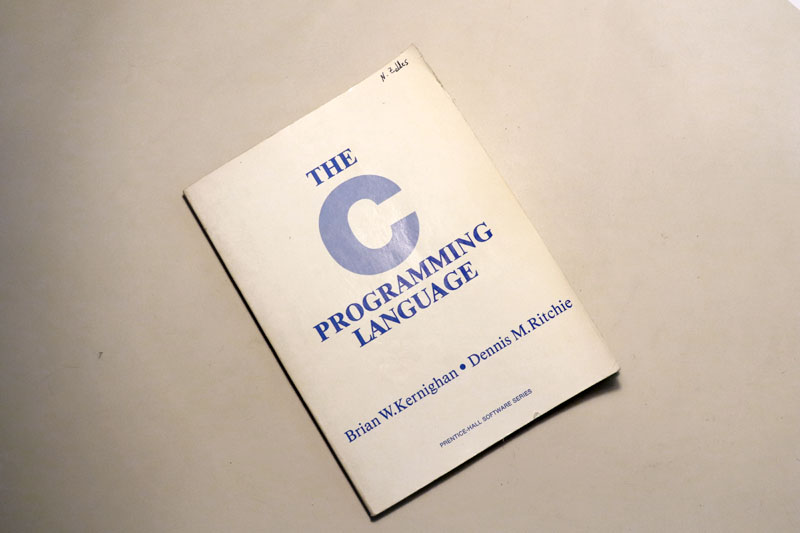

The way I did it in the past was to study the language, master it, and then use it to write my code. Take C, for example: I learned that terse, powerful language by reading, from cover to cover, the book known as K&R, “The C Programming Language” by Kernighan and Ritchie. It was focused, succinct, and quite easy to follow, starting with a tutorial introduction and then presenting all the elements of the language clearly and methodically: data types, operators, control flow, functions, program structure, etc., etc. By reading the book and working through its short exercises, you came away knowing the language and could get rolling writing real programs. How else could one learn a language?

So I bought a book that promised to teach me Java by writing games for Android. Sounds like fun, right? But I was in for a surprise. The book walked me through installing Android Studio, then jumped straight into writing a simple game. What it failed to do was teach me the Java language, or the Android OS for that matter. Instead, it kept throwing code at me, instructing me to plug it into the IDE and see the outcome. And it would make statements like this:

> You do not need to understand Java classes at this point in order to write a game. Think of them as black boxes. In chapter 7, we will look into this in more detail.

Really?? You can write object-oriented code without having to understand classes?!

Or this:

> OK, this code you typed may seem a mystery. Try to guess what it does. It will become clear when we reach chapter 5.

So, I was being shown how to write a simple game by copying what the author had written, but I was not being systematically taught the language at all.

After a while I ditched the book and browsed the numerous resources available online, from YouTube videos to formal e-learning courses. There were plenty of those, bless them, but most of them were also focused on guiding me through developing code; explaining the philosophy and syntax of the language was not their priority.

Of course, being an engineer, I’m used to making do with the available resources, so I gave up my hopes of enlightenment and started experimenting on my own, using Google to find out how things were done. I soon realized that the Android API is incredibly rich, complex, and quirky. It is also a moving target, so what works one day on one phone may crash another day or on another device. Fortunately, I also discovered Stack Overflow, an exuberant site where coders share their woes and get advice on how to fix them: a lot of advice, like half a dozen proposed code snippets that may or may not work for you, and a lively discussion about which is the best one. I’ve delighted in this sort of communal sharing ever since the early Usenet days, so I dug in, and I’m managing just fine (and having my fun).

But looking at the situation, I’m struck by the paradigm shift. Rather than learning to code in a systematic, formal manner, today’s coders feel quite at home in one huge, chaotic ecosystem of trial and error, where you don’t write your code from first principles but instead collect bits and pieces of it by googling through countless sites, blogs and videos. Nor is there any burden on the coder to know what’s right; there are so many opinions on how anything should be done that no single right way stands out, just an overload of options to pick from until something ends up working well.

This new way of programming (which is certainly effective – just look at the huge number of apps out there, and at the extreme ease with which young people are writing them) is well aligned with the information environment we live in. In a world where people have access to limitless resources but a shrinking attention span, reading a book from cover to cover is barely an option, while skipping from site to site on a search results page is the natural thing to do.

And there is another thing that is no longer in fashion: up-front attention to detail. Back in the days of the mainframe you had to write your program with the greatest care, because you had to submit it to be run and wait hours or days to see the result; you double-checked your source code until you were sure it would work (and it often still didn’t, of course). But on a personal computer, you don’t even check – you run the code and see what happens, find the bugs, fix them and run again. It’s faster than proofing your work in advance. I also suspect that it contributes to a certain shallowness, a lack of rigor in writing the code, since the penalty for errors is minimized by the ability to correct and re-run at no extra cost.

Well, at least it’s still lots of fun!

Yes, Stack Overflow is invaluable. At the same time, to a certain extent it leads to programming without real understanding (see https://en.wikipedia.org/wiki/Cargo_cult_programming).

The other trend you should be aware of is that some “programming” sites exist only to sell advertising. Their articles tend to be second-rate copies of information gleaned from elsewhere on the web.